As AI systems move closer to large-scale deployment across distributed infrastructures, ensuring their regulatory conformity becomes essential. Within ENACT, this need is addressed by the AI Act Compliance Checker, a tool designed to help organizations navigate the stringent requirements of the EU AI Act. By combining document intelligence, secure data handling, and a structured LLM-driven assessment workflow, the Compliance Checker turns complex legal obligations into clear, traceable, and actionable compliance insights.

A Foundation for Compliance in Distributed AI Deployments

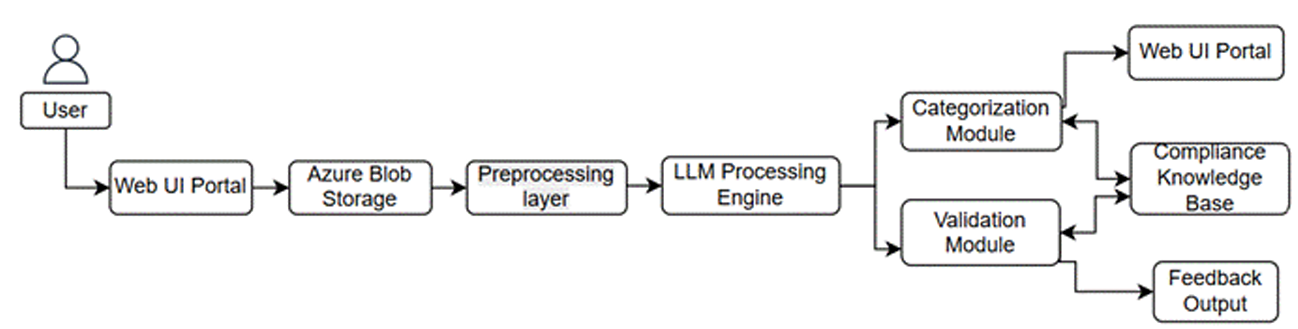

Main components of the AI Compliance Checker architecture

The Compliance Checker identifies regulatory risks and determines whether an AI system falls into one of the Act’s predefined categories: prohibited, low-risk, limited-risk, high-risk, general-purpose, or general-purpose with systemic risk. Its primary purpose is to ensure that AI systems are correctly classified and that their documentation aligns with the AI Act’s mandatory requirements before they are deployed across the ENACT Cognitive Computing Continuum (CCC).

To support this classification process, the system parses a range of documentation formats (.docx, .pdf, .pptx, and .txt), using document intelligence capabilities for text extraction and preprocessing. When documents contain visual content, GPT-4 Vision is used to extract and interpret the images, so that both textual and graphical information are included in the compliance evaluation.
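The intake step described above can be sketched as a small planning function. This is a minimal illustration, not the tool's actual code: the function name `plan_extraction`, the step labels, and the `has_images` flag are assumptions introduced here; only the four file formats come from the text.

```python
from pathlib import Path

# The four formats named in the text.
SUPPORTED_SUFFIXES = {".docx", ".pdf", ".pptx", ".txt"}


def plan_extraction(filename: str, has_images: bool = False) -> list[str]:
    """Return the ordered processing steps for one uploaded file.

    Step labels are hypothetical names for illustration only.
    """
    suffix = Path(filename).suffix.lower()
    if suffix not in SUPPORTED_SUFFIXES:
        raise ValueError(f"Unsupported format: {suffix or '(none)'}")
    steps = ["text_extraction"]
    # Visual content gets an additional pass through a vision model.
    if has_images:
        steps.append("image_interpretation")
    return steps
```

Rejecting unsupported formats before any extraction keeps unexpected inputs out of the later, more expensive LLM stages.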

All processed documents are stored temporarily in Azure Blob Storage, under tightly controlled access policies that enforce encryption, restricted availability, and automatic deletion following evaluation.
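The "delete after evaluation" guarantee can be illustrated with a scoped resource. The sketch below uses a local temporary directory as a stand-in for the blob container, so it does not model Azure Blob Storage, encryption, or access policies; `temporary_document` and `evaluate` are hypothetical names.

```python
import tempfile
from contextlib import contextmanager
from pathlib import Path


@contextmanager
def temporary_document(name: str, data: bytes):
    """Hold an uploaded document only for the duration of one evaluation."""
    with tempfile.TemporaryDirectory() as workdir:
        path = Path(workdir) / Path(name).name  # drop any directory parts
        path.write_bytes(data)
        yield path
    # Leaving the block removes the directory and the document with it,
    # including when the evaluation raises an exception.


def evaluate(path: Path) -> int:
    """Hypothetical stand-in for the evaluation step: returns the byte count."""
    return len(path.read_bytes())
```

A caller would wrap the evaluation in `with temporary_document("system_card.txt", b"example") as doc:` so that the file cannot outlive the evaluation.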

LLM-Driven Classification and Compliance Assessment

At the core of the Compliance Checker lies a Large Language Model (LLM) responsible for determining regulatory categorization and validating documentation. This model operates on structured legal definitions derived from the AI Act’s annexes and articles, which are dynamically provided through a prompt orchestration layer.
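One way to picture the prompt orchestration layer is a function that combines category definitions with the system description. The sketch below is an assumption about the general shape only: the definition texts are placeholders, and `build_classification_prompt` and the requested JSON keys are invented for illustration.

```python
# Placeholder definitions; the real tool derives these from the AI Act's
# articles and annexes, which are not reproduced here.
CATEGORY_DEFINITIONS = {
    "prohibited": "<definition text supplied by the knowledge base>",
    "high-risk": "<definition text supplied by the knowledge base>",
}


def build_classification_prompt(system_description: str,
                                definitions: dict[str, str]) -> str:
    """Assemble one prompt from legal definitions plus the system description."""
    lines = ["Classify the AI system into exactly one of these categories:"]
    for name, text in definitions.items():
        lines.append(f"- {name}: {text}")
    lines.append("")
    lines.append("System description:")
    lines.append(system_description.strip())
    lines.append("")
    lines.append("Answer in JSON with the keys: category, justification.")
    return "\n".join(lines)
```

Supplying the definitions at call time, rather than hard-coding them in the prompt, means the legal text can be updated without touching the orchestration code.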

The resulting outputs are delivered in structured JSON format, including:

- Flags indicating category classification

- Validation summaries

- Traceable justifications for the decisions

These outputs are designed for consumption by other ENACT components, enabling compliance-aware deployment decisions across the broader infrastructure.
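A structured result of this kind might look like the following. The field names and example values are illustrative assumptions, not the tool's published schema; the three fields simply mirror the three output elements listed above.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ComplianceResult:
    # Field names are hypothetical; they mirror the three listed output elements.
    category_flags: dict[str, bool]
    validation_summary: str
    justifications: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the result for consumption by other components."""
        return json.dumps(asdict(self), indent=2)


# Made-up example values, for illustration only.
result = ComplianceResult(
    category_flags={"high_risk": True, "prohibited": False},
    validation_summary="Two of three required sections are present.",
    justifications=["Placeholder justification citing the relevant annex."],
)
print(result.to_json())
```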

The tool is already available as a stand-alone web application with fully operational APIs for classification and validation; integration with the rest of the ENACT architecture is planned for future development. Once integrated, AI systems across ENACT will be able to surface their compliance status in real time, supporting automated deployment, logging, and performance correlation.

Guided Workflows and Standardized Documentation

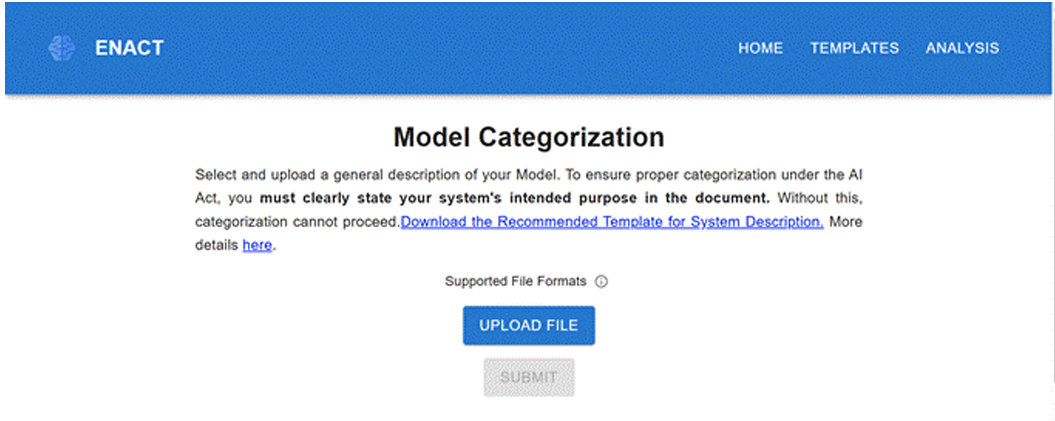

The Compliance Checker provides structured workflows that guide users in preparing and submitting the required documentation. To streamline conformity assessment, downloadable documentation templates are included within the web UI. These templates mirror the structures defined in the AI Act’s annexes, ensuring that user submissions are complete and properly aligned with legislative expectations.

Document placeholders are mapped to specific compliance requirements, enabling the LLM to perform automated validation by comparing each submission against the relevant legal criteria.
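A placeholder-to-requirement mapping also makes simple pre-checks possible before any LLM call. The sketch below assumes a `{{NAME}}` placeholder syntax and two example requirement labels; neither is taken from the actual templates.

```python
import re

# Hypothetical placeholder names and requirement labels for illustration.
PLACEHOLDER_REQUIREMENTS = {
    "INTENDED_PURPOSE": "Description of the intended purpose",
    "RISK_MANAGEMENT": "Description of the risk management system",
}

# Assumed placeholder syntax: {{NAME}}
PLACEHOLDER_PATTERN = re.compile(r"\{\{(\w+)\}\}")


def unfilled_requirements(document_text: str) -> list[str]:
    """List requirements whose placeholder is still present, i.e. not filled in."""
    remaining = set(PLACEHOLDER_PATTERN.findall(document_text))
    return [
        requirement
        for name, requirement in PLACEHOLDER_REQUIREMENTS.items()
        if name in remaining
    ]
```

For a submission whose risk management section still reads `{{RISK_MANAGEMENT}}`, the function returns that one requirement, which can be reported to the user without spending an LLM call on an empty section.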

Security remains integral throughout this workflow: all files are encrypted during storage and transmission, and access to processing endpoints is strictly authenticated. At the end of each session, all uploaded materials are automatically deleted, safeguarding user privacy and organizational confidentiality.

A dedicated security audit is planned to validate the robustness of these measures prior to large-scale deployment.

Web UI home page

Validation Mode: From Categorization to Compliance Assurance

Once an AI system is classified, especially if it falls under the high-risk or general-purpose categories, the user is directed into Validation Mode. There, the LLM evaluates each uploaded document against the specific regulatory criteria extracted from the Compliance Knowledge Base.

The system assesses:

- Completeness of documentation

- Alignment with required standards

- Relevance and conformity of submitted evidence

Structured feedback identifies either full compliance or gaps requiring revision. These results are presented through the frontend, with direct traceability to the corresponding AI Act requirements.
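The step from per-requirement findings to overall feedback can be sketched as a small aggregation. The `Finding` type, the status labels, and the `summarize` function are assumptions made for illustration; the requirement reference is carried through so each gap stays traceable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    requirement: str  # reference to the AI Act requirement, for traceability
    satisfied: bool
    note: str = ""


def summarize(findings: list[Finding]) -> dict:
    """Report full compliance, or the gaps that need revision."""
    if not findings:
        # Nothing was checked, so compliance cannot be claimed.
        return {"status": "not_assessed", "gaps": []}
    gaps = [f for f in findings if not f.satisfied]
    return {
        "status": "compliant" if not gaps else "gaps_found",
        "gaps": [{"requirement": f.requirement, "note": f.note} for f in gaps],
    }
```

Treating an empty list as "not assessed" rather than "compliant" avoids reporting compliance when no check actually ran.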

Use Cases and Operational Deployment

The Compliance Checker primarily supports AI developers, providers, and compliance officers who must ensure regulatory compliance before market deployment. Users can classify their systems, upload required documents, and validate submissions according to Annex IV (high-risk) or Annex XI (general-purpose) requirements.
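The category-to-annex routing stated above is small enough to express as a lookup. The function name `annex_for` and the category spellings are assumptions; categories the text does not map to an annex return `None` rather than a guess.

```python
from typing import Optional

# Mapping taken from the text: Annex IV for high-risk, Annex XI for general-purpose.
VALIDATION_ANNEX = {
    "high-risk": "Annex IV",
    "general-purpose": "Annex XI",
}


def annex_for(category: str) -> Optional[str]:
    """Return the annex to validate against, or None if no annex is mapped."""
    return VALIDATION_ANNEX.get(category.strip().lower())
```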

Currently deployed as a stand-alone web application, the tool includes:

- A secure web frontend

- Azure Blob Storage for temporary document handling

- A preprocessing layer that standardizes inputs

- An LLM-driven categorization and validation engine

- A structured compliance knowledge base

Future integration may expand its role within broader compliance management ecosystems or orchestration frameworks.

Additional beneficiaries include internal compliance teams, technology vendors, R&D institutions, and public-sector procurement bodies, all of whom can leverage the tool to assess regulatory alignment early and efficiently.

With its combination of secure document handling, structured workflows, and LLM-powered compliance intelligence, the AI Act Compliance Checker bridges technical development and regulatory responsibility, offering a clear pathway toward trustworthy, legally aligned AI deployment within ENACT and beyond.